With the proliferation of Microservices, a Contract-First approach keeps API changes consistent, while an API Gateway keeps API operations under control.

The weekly mood

This is just another office day without too much project activity, so I have enough time to review pre-defined team tracks, as well as my past achievements and future directions.

I am now looking at our API Architecture Re-design. As our Platform, which is based on a Microservices architecture, grew over time, we ended up with quite a lot of heterogeneous APIs. This heterogeneity is becoming hard to manage and to maintain. Therefore we started a dedicated initiative.

Why is API a hot topic?

In a nutshell, an Application Programming Interface (API) is a generic facade that enables modularity and integration between software applications and components, independently from their technologies.

In an API-driven economy (aka. API Economy), APIs are the main point of interaction between internal applications (private API), business entities (partner API), end-users and customers (public API), and (IoT) devices. The practice of billing for the use of an external API (ex. in Cloud computing services) is called API Monetization. There are also free-of-charge APIs with the goal of collecting information from Smart devices, increasing regulatory compliance (ex. Solvency II and PSD2 directives) or transparency (ex. Open data). There is a great API use-case study available here.

However, unlike other business interfaces like for instance Peer-to-Peer (ex. EDI Applicability Statement, Bitcoin network), an API endpoint typically has a higher potential for exposure and sharing across many consumers. Public APIs therefore follow strict requirements around security (ex. OWASP), availability (ex. SLA), stability (ex. client version compatibility), model simplicity and consistency (ex. Developer eXperience).

There are 2 main categories and a number of API Styles:

- Operation-based

  - Remote Procedure Call, ex. XML-RPC, (Google) gRPC

  - Distributed Object, ex. CORBA, (Microsoft) COM

  - Web Service, ex. CGI, SOAP, WebHook, WebSocket

- Resource-based

  - "REST-like", ex. Active Server Pages (ASP), Jakarta Server Pages (JSP), (Microsoft) OData

  - RESTful, ex. OAS, (Facebook) GraphQL, (Netflix) Falcor

Currently the most popular style is REST. Its statelessness is aligned with distributed systems requirements, and its HTTP foundation delivers enough performance and convenience for most developers and clients (ex. Web-browser, Mobile device). However, REST is neither a standard nor necessarily the best option in every case. So we might see specifications and alternatives grow in the future.

API industrialisation

According to API-University, an API Architecture should consider the following three building blocks:

- API Development

- API Gateway

- API Management (APIM)

It might be worth adding a 4th block for API Operations (APIOps).

Obviously we don't want to "re-invent the wheel" by developing each part of the API architecture from scratch. Our approach is to look at existing frameworks and products instead, and eventually select those that fit our needs. Each solution might call itself a platform and provide a range of features, some of them good and some "less good". From my experience, this is typically the case with commercial offerings that come with high vendor lock-in, for example those from major Cloud providers.

In this article, we set the focus on API Development and API Gateway.

API Development supports creating and publishing web application programming interfaces. There is a great manifesto of the API developer mindset available here.

There are 2 main development activities:

- API Design via Contract-First Approach

- API Implementation via Operable Microservice

We will show how developers shall publish and consume APIs as part of a future post dedicated to API Management.

API Design

A Contract-First Approach requires designing the API before implementing the logic, and not the other way around, so that the specification can be considered the single source of truth across the whole API life-cycle. The definition should be encoded in a standard descriptive format, for example OpenAPI Specification (OAS) or RAML in the case of REST, and centralized in a Version Control System (VCS), for example a Git repository hosted on GitHub.

Source: OpenAPIs Initiative (OAI)
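To make the idea of a contract more concrete, here is a minimal sketch of what such a definition can look like in OAS v3. It is an abridged, illustrative excerpt modelled on the public Petstore example, not the full specification used later in this article; the single path and the reduced Pet schema are kept only to show the shape of a contract.

```yaml
# Abridged, illustrative contract modelled on the public Petstore example
openapi: 3.0.0
info:
  title: Petstore (excerpt)
  version: 1.0.0
paths:
  /pets/{petId}:
    get:
      operationId: showPetById
      parameters:
        - name: petId
          in: path
          required: true
          schema:
            type: string
      responses:
        '200':
          description: Expected response to a valid request
          content:
            application/json:
              schema:
                $ref: '#/components/schemas/Pet'
components:
  schemas:
    Pet:
      type: object
      required: [id, name]
      properties:
        id:
          type: integer
          format: int64
        name:
          type: string
```

Everything downstream (mock, documentation, server stub, gateway route) can then be derived from this one file instead of being maintained by hand.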

In order to stick to consistent Design Principles (ex. REST API Standards), a visual modelling tool including support for templating, versioning and publishing can be an enabler for better development productivity, transparency and quality. There are a number of candidates, some of them open-source, including Postman, Restlet Studio, Swagger Editor and Kong Insomnia.

The design phase allows for exposing an API Mock or dummy implementation, along with the automatically built API Documentation. Those can be consumed in parallel by testers, users and developers, along with the specification.

API Implementation

Remember that developers and operators need a lean experience, and that the API Implementation logic is much more than an endpoint able to accept an input, compute it and reply with an output. Indeed, some common libraries should be integrated into the project for managing consistent request validation, versioning, response format, paging, filtering, sorting, cacheability, observability etc. This kind of Operability is achieved, for example in the Java world, with a Spring Boot Microservice.

Since I am too lazy to model a new API from scratch, we'll start with an existing OAI example: (Basic) Petstore API in OASv3 format.

We can download or directly import the specification from file URL using Insomnia.

We will create our server stub using the Java-based client OpenAPITools/openapi-generator.

$ wget https://repo1.maven.org/maven2/org/openapitools/openapi-generator-cli/5.0.0-beta/openapi-generator-cli-5.0.0-beta.jar -O openapi-generator-cli.jar

$ export JAVA_HOME=/usr/lib/jvm/default-java/

$ export PATH=${JAVA_HOME}/bin:$PATH

$ mkdir openapi-petstore-dummy

$ cd openapi-petstore-dummy

Let us see how to generate a Java-based Microservice. Spring Boot makes it easy to create stand-alone, production-grade Spring based Applications that you can "just run" with minimal configuration.

$ java -jar ../openapi-generator-cli.jar list
...
SERVER generators:
  spring
...

We can actually do it right from the API specification, as per the API Design-First approach.

$ java -jar ../openapi-generator-cli.jar generate \
    -i ../petstore-oas3.yaml -g spring -o .
$ mvn clean install
$ java -jar target/openapi-spring-1.0.0.jar
 /\\ / ___'_ __ _ _(_)_ __  __ _ \ \ \ \
( ( )\___ | '_ | '_| | '_ \/ _` | \ \ \ \
 \\/  ___)| |_)| | | | | || (_| |  ) ) ) )
  '  |____| .__|_| |_|_| |_\__, | / / / /
 =========|_|==============|___/=/_/_/_/
 :: Spring Boot ::        (v2.0.1.RELEASE)
...
PI2SpringBoot : Started OpenAPI2SpringBoot in 3.298 seconds (JVM running for 3.653)

Our endpoint is now available at http://localhost:8080 along with a Swagger documentation.

Of course our app is nearly empty, but it is a good starting point. If you are interested in a more complete and realistic example, there is a reference implementation (based on an extended version of the Petstore API specification) including further HTTP verbs and resources, mock data, and a security key.

$ cd ..
$ git clone git@github.com:OpenAPITools/openapi-petstore.git
Cloning into 'openapi-petstore'...
$ cd openapi-petstore
$ mvn spring-boot:run
...
$ curl -H 'api_key: special-key' "http://localhost:8080/v3/store/inventory"
{"sold":1,"pending":2,"available":7}

I ran into a ClassNotFoundException at server start when using OpenJDK 11, which I managed to fix by adding the JAXB dependencies to the Maven POM as follows:

$ sed -i 's|</dependencies>| \
    <dependency> \
        <groupId>javax.xml.bind</groupId> \
        <artifactId>jaxb-api</artifactId> \
        <version>2.3.1</version> \
    </dependency> \
    <dependency> \
        <groupId>com.sun.xml.bind</groupId> \
        <artifactId>jaxb-core</artifactId> \
        <version>2.3.0.1</version> \
    </dependency> \
    <dependency> \
        <groupId>com.sun.xml.bind</groupId> \
        <artifactId>jaxb-impl</artifactId> \
        <version>2.3.1</version> \
    </dependency> \
</dependencies>|' pom.xml

The project also provides a convenient Dockerfile for embedding the produced JAR-file. This might not be the recommended approach for Dockerizing a Spring Boot application for production, but we are in a PoC. So let us deploy our Petstore Microservice application to a Kubernetes cluster, using a simple Deployment with 2 Pods and 1 Service (the Ingress follows in the Kong section).

$ cat << EOF > petstore-svcdepl.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: petstore-deployment
spec:
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: petstore
  replicas: 2
  template:
    metadata:
      labels:
        app: petstore
    spec:
      containers:
      - name: petstore-worker
        image: openapitools/openapi-petstore
        ports:
        - containerPort: 8080
        env:
        - name: OPENAPI_BASE_PATH
          value: "/v3"
---
kind: Service
apiVersion: v1
metadata:
  name: petstore-service
spec:
  selector:
    app: petstore
  type: LoadBalancer
  ports:
  - name: petstore-port
    port: 80
    targetPort: 8080
EOF

$ kubectl -n default apply -f petstore-svcdepl.yaml
deployment.apps/petstore-deployment created
service/petstore-service created

You can now issue a service request as follows:

$ curl -H "api_key: special-key" \
    "http://$( \
        kubectl get service/petstore-service \
        | tail -1 | awk '{print $3}' \
    )/v3/store/inventory"
{"sold":1,"pending":2,"available":7}

In a Microservices architecture, the API Gateway is a central reverse proxy that relieves individual services of implementing their own logic and rules around network, security and logging. It helps reduce the number of public URL-domains and certificates used in an organisation. Because it controls API access via configuration rules applied dynamically at runtime, without the need to implement or change code, it is often referred to as a "smart proxy". It is also a potential single-point-of-failure (for the global API architecture) that should therefore support strong performance and resiliency.

Source: The role of an API Gateway by Express GW

The API Gateway tends to have a growing set of responsibilities including:

- Proxying multiple API resources through a single endpoint

- Routing and Load-balancing incoming traffic

- Authentication (aka. "AuthN") against an Identity Provider (IdP, ex. Auth0) and Authorization (aka. "AuthZ") via an access control flow (ex. OAuth2)

- Certificate and Key-Management

- Enforcing API usage policies ex. Caching, Rate limiting/Throttling, Content encryption

- Logging and Monitoring

In the context of Kubernetes, Gateway configurations usually consist of both YAML files stored on the cluster and a Gateway database. Changes can be made via a specific client, an API or an Administration UI. Leading open-source candidates are Kong, Gloo, Ambassador, Umbrella, Tyk, KrakenD and Contour.

What is Kong

We started with Kong Gateway Open-Source, a popular project developed by Kong Inc. (formerly Mashape). It is built on top of Nginx with an object-oriented model, a pluggable architecture and a number of useful extensions written in Lua or Go. Kong integrates nicely with Kubernetes via a dedicated Ingress Controller.

In Kong Gateway Enterprise (commercial offering), Kong provides a Developer Portal (Kong Dev Portal) and an Administration UI (Kong Manager). We will evaluate the former later on, provided that we decide to register for a trial or subscribe to technical support. For the latter, there is a free unofficial alternative: Konga.

The functional split between the Open-Source and Enterprise versions is highlighted on the module page.

Source: Kong Plugin hub

Kong setup

Although a minimal resource descriptor like the one available here is sufficient for deploying Kong in Kubernetes, we will look at a Helm chart that supports advanced configuration, in order to include a PostgreSQL Database and activate the Kong administration API.

$ helm repo add kong https://charts.konghq.com
$ helm repo update
# Helm 2
$ helm install kong/kong --name kong \
    --set admin.enabled=true \
    --set admin.http.enabled=true \
    --set admin.ingress.enabled=true \
    --set env.database=postgres \
    --set env.pg_user=kong \
    --set env.pg_database=kong \
    --set postgresql.enabled=true \
    --set postgresql.postgresqlUsername=kong \
    --set postgresql.postgresqlDatabase=kong \
    --set postgresql.service.port=5432
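The same settings can also be kept in a values file, which is easier to review and to version than a long list of --set flags. The sketch below is a one-to-one transcription of the flags above; the file name kong-values.yaml is an assumption.

```yaml
# kong-values.yaml (hypothetical file name): same settings as the --set flags
admin:
  enabled: true
  http:
    enabled: true
  ingress:
    enabled: true
env:
  database: postgres
  pg_user: kong
  pg_database: kong
postgresql:
  enabled: true
  postgresqlUsername: kong
  postgresqlDatabase: kong
  service:
    port: 5432
```

It would then be passed to the same install command with -f kong-values.yaml in place of the --set flags.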

The Helm release starts 3 pods and 4 containers:

- kong:

  - proxy: Nginx endpoint listening to all incoming traffic on TCP ports 8000/8443

  - ingress-controller: Incoming traffic router as per Kubernetes controller manager

- postgresql: standard database chart

- init-migrations: init container that bootstraps the database

All configuration changes to the Gateway are processed by the Ingress Controller container. They can be triggered in 3 different ways:

- Request to Kong administration API

- Instantiation of Kubernetes Ingress (a route to services that is automatically managed by Kong ingress-controller unless some specific IngressClass is used)

- Instantiation of Kong Custom Resource

  - KongIngress supports creation and advanced configuration of Ingress/Service objects via abstract parameters Route, Upstream and Proxy. For example, fine-grained routing (ex. by HTTP verb) is not possible in Kubernetes standard ingress. Mapping between Ingress/Service and KongIngress objects is done via Kong 'override' annotation.

  - KongPlugin supports configuration of Plugins via plugin-specific parameters. Related rules may apply globally at controller level, or locally at Ingress/Service level. Mapping between Ingress/Service and KongPlugin objects is done via Kong 'plugin' annotation.
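As an illustration of the two custom resources, the following sketch restricts a route to GET requests with a KongIngress and attaches a rate limit with a KongPlugin. The resource names, the limit of 5 requests per minute and the annotation keys are assumptions: the keys shown are the konghq.com/-prefixed ones, while older controller releases used configuration.konghq.com and plugins.konghq.com instead, so check the documentation of the version you deploy.

```yaml
# Illustrative sketch; names and values are assumptions
apiVersion: configuration.konghq.com/v1
kind: KongIngress
metadata:
  name: petstore-read-only      # hypothetical name
route:
  methods:                      # fine-grained routing by HTTP verb
  - GET
---
apiVersion: configuration.konghq.com/v1
kind: KongPlugin
metadata:
  name: petstore-rate-limit     # hypothetical name
plugin: rate-limiting
config:
  minute: 5                     # arbitrary example limit
  policy: local                 # counters kept in memory on each node
---
# Fragment only: Ingress metadata mapping both resources to the route
metadata:
  name: petstore-ingress
  annotations:
    konghq.com/override: petstore-read-only
    konghq.com/plugins: petstore-rate-limit
```

Keeping such rules in custom resources means they can travel through the same review and deployment pipeline as the service manifests.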

Note that Kong also supports auto-scaling across multiple Kubernetes nodes via Service-mesh / Control plane. Kong uses Kuma by default, but an example with Istio is also available here.

Kong API discovery

Our service from above is automatically exposed by Kong Gateway as soon as we create either a regular Ingress or a KongIngress pointing to it:

$ cat << EOF > petstore-ingress.yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: petstore-ingress
spec:
  rules:
  - host: petstore.host
    http:
      paths:
      - path: /
        backend:
          serviceName: petstore-service
          servicePort: 80
EOF

$ kubectl -n default apply -f petstore-ingress.yaml
ingress.extensions/petstore-ingress created
$ export KONG_PROXY_URL="http://$(kubectl describe svc kong-kong-proxy | grep Endpoints: | grep 8000 | awk '{print $2}')"
$ curl -H "Host: petstore.host" -H "api_key: special-key" \
    "$KONG_PROXY_URL/v3/store/inventory"
{"sold":1,"pending":2,"available":7}

Note that Inso, the Insomnia CLI, can generate both Ingress and KongIngress resource descriptors from an OAS3 specification, which eventually helps with deployment and lifecycle automation.

$ curl -sL https://deb.nodesource.com/setup_14.x | sudo -E bash -
$ sudo apt-get install nodejs node-pre-gyp
$ npm install -g insomnia-inso
$ inso generate config petstore-oas3.yaml -t kubernetes
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: swagger-petstore-0
spec:
  rules:
  - host: petstore.swagger.io
    http:
      paths:
      - path: /v1/.*
        backend:
          serviceName: swagger-petstore-service-0
          servicePort: 80

Obviously there are many more advanced scenarios than a simple route to consider, as reflected by the huge number of different tutorials available here.
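The extensions/v1beta1 Ingress API used in this section was removed in Kubernetes 1.22. On newer clusters, the petstore-ingress manifest would have to be expressed with networking.k8s.io/v1, roughly as sketched below. The ingressClassName value kong is an assumption about how the controller was installed.

```yaml
# Sketch of the same route in the networking.k8s.io/v1 schema
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: petstore-ingress
spec:
  ingressClassName: kong        # assumption: class name of the Kong controller
  rules:
  - host: petstore.host
    http:
      paths:
      - path: /
        pathType: Prefix        # mandatory in v1
        backend:
          service:
            name: petstore-service
            port:
              number: 80
```

The main differences are the mandatory pathType and the nested service backend that replaces serviceName and servicePort.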

Kong Administration API

The API can be queried via cURL:

$ export KONG_ADMIN_URL="http://$(kubectl describe svc kong-kong-admin | grep Endpoints: | grep 8001 | awk '{print $2}')"

$ curl $KONG_ADMIN_URL/status

{"database":{"reachable":true},"memory":{"workers_lua_vms":[{"http_allocated_gc":"41.90 MiB","pid":23}],"lua_shared_dicts":{"kong_locks":{"allocated_slabs":"0.06 MiB","capacity":"8.00 MiB"},"kong":{"allocated_slabs":"0.04 MiB","capacity":"5.00 MiB"},"kong_process_events":{"allocated_slabs":"0.07 MiB","capacity":"5.00 MiB"},"kong_db_cache_miss":{"allocated_slabs":"0.08 MiB","capacity":"12.00 MiB"},"kong_healthchecks":{"allocated_slabs":"0.04 MiB","capacity":"5.00 MiB"},"kong_cluster_events":{"allocated_slabs":"0.04 MiB","capacity":"5.00 MiB"},"kong_core_db_cache_miss":{"allocated_slabs":"0.08 MiB","capacity":"12.00 MiB"},"kong_core_db_cache":{"allocated_slabs":"0.77 MiB","capacity":"128.00 MiB"},"prometheus_metrics":{"allocated_slabs":"0.04 MiB","capacity":"5.00 MiB"},"kong_db_cache":{"allocated_slabs":"0.76 MiB","capacity":"128.00 MiB"},"kong_rate_limiting_counters":{"allocated_slabs":"0.08 MiB","capacity":"12.00 MiB"}}},"server":{"connections_writing":1,"total_requests":1362,"connections_handled":1321,"connections_accepted":1321,"connections_reading":0,"connections_active":1,"connections_waiting":0}}

Source: Kong Ingress Controller Design

Konga Administration UI

$ helm install --name konga https://github.com/pantsel/konga/raw/master/charts/konga/konga-1.0.0.tgz

$ export KONGA_URL="http://$(kubectl describe svc konga | grep Endpoints: | awk '{print $2}')"

$ sensible-browser $KONGA_URL

Once Konga is deployed and showing up in the browser, create a local administrator account (ex. admin/adminadminadmin), then a connection to the kong-admin API service http://kong-kong-admin.kong.svc.cluster.local:8001, and finally hit the activate button.

Once activated, the admin UI reveals new menu items and, if any exist, non-segmented ingress/service objects.

Clean the environment

We are done with our evaluation of Kong.

$ kubectl -n default delete -f petstore-ingress.yaml
$ helm del --purge konga
$ helm del --purge kong
$ kubens default
$ kubectl delete namespace kong

What is Gloo

Gloo is an open-source cloud-native API Gateway and Ingress Controller built on Envoy Proxy. It is developed by Solo.io, a nextgen vendor that is focusing on Kubernetes, Microservices, Service Mesh and Serverless architecture.

Gloo comes with a dedicated client tool that is very handy for setup and configuration: glooctl

It uses Kubernetes custom resources to adopt a comprehensive approach consisting of 3 main layers:

- Gateway objects help you control the listeners for incoming traffic.

- Virtual Service objects allow you to configure routing to the exposed API.

- Upstream objects represent the backend services.

It has a couple of innovative sweet spots. It can:

- Route requests directly to Functions, which can be a serverless Function-as-a-Service (FaaS) e.g. AWS Lambda, Azure Functions, or a custom API call.

- Auto-discover APIs from IaaS, PaaS/FaaS providers, Swagger, gRPC, and GraphQL.

- Integrate with top open-source projects incl. Knative, OpenTracing, Vault.

Source: Gloo Routing documentation

The functional split between Open-Source and Enterprise versions.

Source: Gloo Product description page

As we can see, the Open-Source version lacks at least all Security features, the Developer Portal and write access in the administration UI, which is more or less a YAML config editor and validator.

Setup Gloo

Unlike Kong, Gloo integrates with Kubernetes by default. 2 different deployment modes are possible:

- Gloo Ingress mode scans for Ingress objects (like Kong Ingress Controller). Gloo offers no real added-value to this approach and therefore does not recommend it.

- Gloo Gateway mode discovers Service objects. This is the Gloo "way" to Cloud Native, and it can integrate with a Service Mesh.

Although the easiest way to set up Gloo is to use its client tool, we will go for a Helm chart release instead, so that we can better control and understand the different settings.

$ kubectl create namespace gloo
$ kubens gloo
$ helm repo add gloo-os-with-ui https://storage.googleapis.com/gloo-os-ui-helm
$ helm repo update
$ helm install --name gloo gloo-os-with-ui/gloo-os-with-ui \
    --set ingress.enabled=false,gateway.enabled=true,crds.create=true \
    --set gloo.gatewayProxies.gatewayProxy.readConfig=enabled \
    --version 1.4.0

The Helm release starts 5 pods and 7 containers:

- proxy: Envoy endpoint listening to all incoming traffic on TCP ports 8080/8443.

- gateway: Validates and translates Gateway, Virtual Service, and RouteTable custom resources into a Proxy custom resource.

- gloo: Validates Proxy custom resource and creates an Envoy configuration.

- discovery: Service discovery server.

- api-server: Administration API, Administration UI, gRPC proxy.

Gloo API Discovery

We will now use Gloo client for any further high-level interactions with the Gateway.

$ wget https://github.com/solo-io/gloo/releases/latest/download/glooctl-linux-amd64

$ chmod +x glooctl-linux-amd64 && mv glooctl-linux-amd64 ~/.local/bin/glooctl

$ glooctl -n gloo version

Client: {"version":"1.4.1"}

Server: {"type":"Gateway","kubernetes":{"containers":[{"Tag":"1.4.0","Name":"grpcserver-ui","Registry":"quay.io/solo-io"},{"Tag":"1.4.0","Name":"grpcserver-ee","Registry":"quay.io/solo-io"},{"Tag":"1.4.0","Name":"grpcserver-envoy","Registry":"quay.io/solo-io"},{"Tag":"1.4.1","Name":"discovery","Registry":"quay.io/solo-io"},{"Tag":"1.4.1","Name":"gateway","Registry":"quay.io/solo-io"},{"Tag":"1.4.1","Name":"gloo-envoy-wrapper","Registry":"quay.io/solo-io"},{"Tag":"1.4.1","Name":"gloo","Registry":"quay.io/solo-io"}],"namespace":"gloo"}}

$ glooctl -n gloo get upstreams | head -10
+---------------------------------+------------+----------+--------------------------------+
|            UPSTREAM             |    TYPE    |  STATUS  |            DETAILS             |
+---------------------------------+------------+----------+--------------------------------+
| default-kubernetes-443          | Kubernetes | Accepted | svc name: kubernetes           |
|                                 |            |          | svc namespace: default         |
|                                 |            |          | port: 443                      |
|                                 |            |          |                                |
| default-petstore-service-80     | Kubernetes | Accepted | svc name:                      |
|                                 |            |          | petstore-service               |
|                                 |            |          | svc namespace: default         |

As we can see, our petstore-service from the default namespace was discovered, without even having to add anything else like an Ingress. Since our services come with Swagger documentation, we may even want to activate fine-grained discovery of resources at namespace level:

$ kubectl label namespace default discovery.solo.io/function_discovery=enabled

As per the Hello World example, we will add a routing rule.

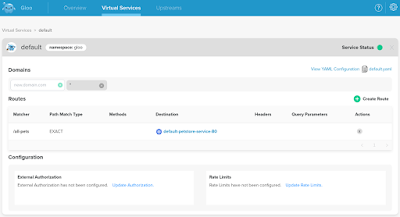

$ glooctl -n gloo add route \
    --path-exact /all-pets --prefix-rewrite /v3/store/inventory \
    --dest-name default-petstore-service-80 --dest-namespace gloo
+-----------------+--------------+---------+------+---------+-----------------+-------------------------------------+
| VIRTUAL SERVICE | DISPLAY NAME | DOMAINS | SSL  | STATUS  | LISTENERPLUGINS |               ROUTES                |
+-----------------+--------------+---------+------+---------+-----------------+-------------------------------------+
| default         |              | *       | none | Pending |                 | /all-pets ->                        |
|                 |              |         |      |         |                 | default.default-petstore-service-80 |
|                 |              |         |      |         |                 | (upstream)                          |
+-----------------+--------------+---------+------+---------+-----------------+-------------------------------------+

This creates the VirtualService named default (or the one specified with --name) if it does not exist yet. With that, the actual API is now able to accept external requests:

$ curl -H "Host: petstore.host" -H "api_key: special-key" \
    "$(glooctl -n gloo proxy url)/all-pets"
{"sold":1,"pending":2,"available":7}

Gloo Administration API

Because the API is based on gRPC, the user should access it using the Gloo client only. Since we use the Flux operator for any modification to our production cluster, and since glooctl is not able to simulate resource creation, we have to clarify whether direct access can be approved for automation and, if so, whether we need to store VirtualService objects retroactively as follows:

$ glooctl get virtualservice default --output kube-yaml
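For orientation, the exported manifest for the /all-pets route added earlier would look roughly like the sketch below. It is written from the Gloo v1 VirtualService schema rather than copied from the cluster, so field layout and defaults may differ between versions; status and generated metadata are omitted.

```yaml
# Sketch of the VirtualService behind the /all-pets route (not actual cluster output)
apiVersion: gateway.solo.io/v1
kind: VirtualService
metadata:
  name: default
  namespace: gloo
spec:
  virtualHost:
    domains:
    - '*'
    routes:
    - matchers:
      - exact: /all-pets                     # from --path-exact
      options:
        prefixRewrite: /v3/store/inventory   # from --prefix-rewrite
      routeAction:
        single:
          upstream:
            name: default-petstore-service-80
            namespace: gloo
```

A manifest of this kind is what a GitOps operator would apply from the repository, which is why exporting it matters for automation.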

As a workaround we may also invoke the API from the CI-Pipeline using a gRPC client (ex. grpcurl).

Gloo Administration UI

$ sensible-browser $(kubectl describe svc apiserver-ui \
    | grep Endpoints: | awk '{print $2}')

Clean the environment

We are done with our evaluation of Gloo.

$ glooctl -n gloo delete virtualservice default
$ helm del --purge gloo
$ kubectl delete namespace gloo

Going further

We also started some detailed specification of different real-life use-case scenarios:

- Design-1st API release lifecycle

  - Ingress route points to a mock API until the service API implementation is released

- Default routes

  - In case no API version or a wrong one is passed by the client in the URL or as URI parameter or header

  - In case the API is (temporarily) not available on the server

- Security

  - Consumer IP whitelisting

  - Relaxed inter-service security

- Request limits for free accounts

  - Quota/Rate: Prevent clients executing too many requests, too often

  - Size: Prevent uploading too large pictures or downloading too large logs

  - Resource: Public API use should be read-only

- Operations

  - Change management (CI/CD)

  - Blue-Green and Canary deployment (LB)

  - Track service availability (SLI/SLO) and trace requests (number, duration)
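As an example of how one of these scenarios might translate into gateway configuration, the upload size limit could be expressed with Kong's request-size-limiting plugin. This is a sketch only: the resource name and the 8 MB threshold are assumptions, and it covers the upload case, not the download case.

```yaml
# Illustrative sketch; name and threshold are assumptions
apiVersion: configuration.konghq.com/v1
kind: KongPlugin
metadata:
  name: petstore-upload-limit   # hypothetical name
plugin: request-size-limiting
config:
  allowed_payload_size: 8       # maximum request body size, in megabytes
```

The plugin would be attached to an Ingress or Service through the Kong 'plugin' annotation, like any other KongPlugin.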

Take away

| | Kong | Gloo |
|---|---|---|
| Proxy kind | Nginx | Envoy |
| Discovery mode | Ingress | Gateway |
| Service Abstraction level | Low | High (VirtualService) |
| Configuration level | High (Plugins) | Low |
| Administration | cURL, Konga (OS), Kong manager (EE) | glooctl, Admin UI (read-only in OS) |
| Automation units | REST API, YAML | gRPC API, YAML |
| Open-Source fit | Demo and Test | Demo only (no security) |
| Governance | Developer Portal (EE) | Developer Portal (EE) |

Source code
